Efficient 360° Video Streaming to Head-Mounted Displays in Virtual and Augmented Reality

Watching a live broadcast of an event, such as a football match or a music concert, on a traditional planar television or monitor is a passive experience. Over the past few years, Virtual Reality (VR) products, such as Head-Mounted Displays (HMDs), have become widely available. Many companies in the computer industry have released HMDs, such as the Oculus Rift DK2, HTC Vive, and Samsung Gear VR. These products offer viewers wider Fields-of-View (FoVs) and a more immersive experience than traditional displays. Many 360° cameras have also been introduced, such as the Ricoh Theta S, Luna 360 VR, and Samsung Gear 360. With the growing popularity of consumer VR products, viewers are now able to watch 360° videos. On top of that, major multimedia streaming service providers, such as Facebook and YouTube, all support 360° video streaming for VR content.

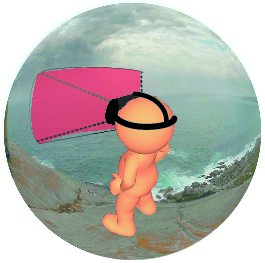

Nowadays, the majority of global Internet traffic is video data (Global Internet Phenomena). Streaming 360° videos further increases Internet traffic, and has become a hot research topic. Leveraging commodity HMDs for 360° video streaming is, however, very challenging for two reasons. First, 360° videos, illustrated in Fig. 1(a), contain much more information than conventional videos, and thus are much larger in resolution and file size. Fig. 1(b) reveals that, with HMDs, each viewer only gets to see a small part of the whole video. Therefore, sending the whole 360° video in full resolution may waste resources, such as network bandwidth, processing power, and storage space. Second, the alternative is to stream only the viewer’s current FoV. We emphasize current, because the FoV changes as the viewer’s head and eyes move, which leads to the main challenge: which FoV should we transfer to meet the viewer’s needs in the next moment? This challenge makes designing a real-time 360° video streaming system to HMDs quite tricky, especially because most video streaming solutions nowadays deliver videos in segments lasting a few seconds, so the FoV has to be anticipated well before it is actually viewed.
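The claim that each viewer sees only a small part of the video can be put in numbers. The sketch below computes the fraction of the sphere covered by a rectangular viewport from its solid angle, Ω = 4·arcsin(sin(a)·sin(b)), where a and b are half of the horizontal and vertical FoV. It assumes a rectilinear 100°×100° viewport, roughly the size given for the Oculus Rift DK2 in the FAQ below; the function name is hypothetical.

```python
import math


def fov_sphere_fraction(h_fov_deg: float, v_fov_deg: float) -> float:
    """Fraction of the full sphere covered by a rectangular (rectilinear) FoV.

    Solid angle of a rectangular pyramid: omega = 4 * asin(sin(a) * sin(b)),
    where a and b are the horizontal and vertical half-angles.
    """
    a = math.radians(h_fov_deg) / 2
    b = math.radians(v_fov_deg) / 2
    omega = 4 * math.asin(math.sin(a) * math.sin(b))  # steradians
    return omega / (4 * math.pi)  # the whole sphere is 4*pi steradians


if __name__ == "__main__":
    # Assumed viewport: 100 x 100 degrees, roughly the Oculus Rift DK2 FoV
    print(f"visible fraction: {fov_sphere_fraction(100, 100):.1%}")
```

Under these assumptions it prints a visible fraction of about 20.0%, so roughly four fifths of the sphere lies outside the viewport at any instant. Real HMD viewports are not perfectly rectangular, so this is an estimate rather than a measurement.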

Goals and Overview

- Design and develop a tile-based 360° video streaming system with viewers using HMDs

- Train and fine-tune new algorithms for 360° video streaming (e.g., viewed tile prediction algorithms)

- Collect a 360° video viewing dataset, which contains content and sensor data, with viewers using HMDs

- Design new Quality-of-Experience (QoE) metrics for 360° video streaming to HMDs

- Develop an optimal bitrate allocation algorithm for 360° video streaming

- Apply Rate-Distortion (R-D) optimization to 360° video streaming to HMDs

Research Activities

Performance Measurements of 360° Video Streaming to HMDs over 4G Cellular Networks

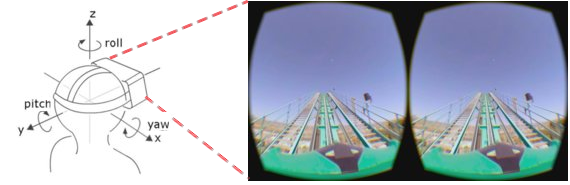

Streaming 360° videos further increases Internet traffic. In particular, streaming videos coded in 4K or higher resolutions leads to insufficient bandwidth and overloaded decoders. As shown in Fig. 2, when watching 360° videos, a viewer wearing an HMD rotates his/her head to actively change the viewing orientation. Viewing orientation can be described by yaw, pitch, and roll, which correspond to rotating along the x, y, and z axes. A viewer with an HMD only gets to see a small part of the whole video. Most of each 360° video is never viewed by a given viewer, and thus streaming the whole 360° video may be unnecessary.
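Because roll only spins the image around the line of sight, where the viewer looks is determined by yaw and pitch alone. The sketch below converts a (yaw, pitch) pair into a unit direction vector and measures the angle between two orientations, a convenient way to quantify how far a viewer’s head has moved. The axis convention (y up, yaw about the vertical axis, pitch as elevation, +z straight ahead) is an assumption and may not match the axis labels in Fig. 2; the function names are hypothetical.

```python
import math
from typing import Tuple


def view_direction(yaw_deg: float, pitch_deg: float) -> Tuple[float, float, float]:
    """Unit viewing direction for a (yaw, pitch) orientation.

    Assumed convention: y points up, yaw = pitch = 0 looks along +z, yaw turns
    around the vertical axis and pitch is the elevation above the horizon.
    Roll is ignored because it only rotates the image around this direction.
    """
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.sin(yaw),
            math.sin(pitch),
            math.cos(pitch) * math.cos(yaw))


def angular_distance_deg(o1: Tuple[float, float], o2: Tuple[float, float]) -> float:
    """Great-circle angle in degrees between two (yaw, pitch) orientations."""
    v1, v2 = view_direction(*o1), view_direction(*o2)
    dot = sum(a * b for a, b in zip(v1, v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))  # clamp rounding error
```

For example, turning the head 90° sideways, `angular_distance_deg((0, 0), (90, 0))`, and looking straight up, `angular_distance_deg((0, 0), (0, 90))`, both give about 90 degrees.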

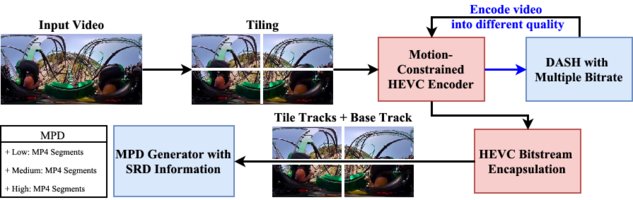

To solve this problem, as Fig. 3 shows, a video is split into tiles of sub-videos, which are then encoded by the HEVC (High Efficiency Video Coding) codec into video bitstreams. We then stream them using MPEG Dynamic Adaptive Streaming over HTTP (DASH), an adaptive streaming technology for delivering videos over the Internet. For DASH streaming systems, each tile is encoded into multiple versions at different bitrates, which allows a client to switch among different bitrates or quality levels. Having multiple tiles allows each client to request and decode only those tiles that will be watched, in order to conserve resources. However, splitting a video into tiles may reduce the coding efficiency compared to a single non-tiled video, since each tile is coded without referencing the content of its neighbors.
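The tiling step in Fig. 3 can be sketched as computing a pixel rectangle for each tile; each rectangle would then be cropped into its own sub-video and encoded by an HEVC encoder at several bitrates for DASH. The sketch assumes row-major tile order and spreads leftover pixels over the first rows and columns when the frame size is not divisible by the grid; the function name and the 3840×1920 example resolution are assumptions.

```python
from typing import List, Tuple


def tile_grid(width: int, height: int, rows: int, cols: int) -> List[Tuple[int, int, int, int]]:
    """Split a width x height frame into rows x cols tiles.

    Returns (x, y, w, h) for every tile in row-major order, starting at the
    upper-left corner. Leftover pixels go to the first rows/columns, so the
    tiles always cover the whole frame without overlapping.
    """
    def cuts(total: int, n: int) -> List[Tuple[int, int]]:
        base, extra = divmod(total, n)
        sizes = [base + (1 if i < extra else 0) for i in range(n)]
        starts = [sum(sizes[:i]) for i in range(n)]
        return list(zip(starts, sizes))

    return [(x, y, w, h)
            for (y, h) in cuts(height, rows)
            for (x, w) in cuts(width, cols)]


if __name__ == "__main__":
    for rect in tile_grid(3840, 1920, 3, 3):
        print(rect)  # each rect would be cropped into its own sub-video
```

For a 3×3 grid on a 3840×1920 frame, `tile_grid(3840, 1920, 3, 3)` returns nine 1280×640 rectangles, starting with `(0, 0, 1280, 640)` in the upper-left corner.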

Therefore, we design several experiments to quantify the performance of 360° video streaming over a real cellular network on our campus. In particular, we investigate diverse impacts of tile streaming over 4G networks, such as the coding efficiency, the potential bandwidth savings, and the number of supportable clients. Our experiments yield several interesting findings, for example, (i) streaming only the tiles viewed by the viewer reduces the bitrate by up to 80%, and (ii) the coding efficiency of 3×3 tiled videos may be higher than that of non-tiled videos at higher bitrates.
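The bandwidth saving from streaming only the viewed tiles is simple arithmetic: the share of the total tile bitrate that is never requested. The sketch below computes it; the per-tile bitrates in the usage example are made-up illustrative values, not measurements from our experiments, and the function name is hypothetical.

```python
from typing import Iterable, Sequence


def bitrate_reduction(tile_bitrates_kbps: Sequence[float], viewed_tiles: Iterable[int]) -> float:
    """Fraction of the bitrate saved by streaming only the viewed tiles.

    tile_bitrates_kbps: bitrate of every tile at the chosen quality (kbps).
    viewed_tiles: indices of the tiles overlapping the viewer's FoV.
    """
    total = sum(tile_bitrates_kbps)
    streamed = sum(tile_bitrates_kbps[i] for i in set(viewed_tiles))  # ignore duplicates
    return 1 - streamed / total


if __name__ == "__main__":
    # Hypothetical 3x3 tiling with equal per-tile bitrates; the viewer sees 2 tiles
    rates = [1000] * 9
    print(f"reduction: {bitrate_reduction(rates, [4, 5]):.1%}")
```

This prints `reduction: 77.8%`. In practice tiles differ in bitrate and a viewport often overlaps several tiles, so the actual saving depends on the content and on how many tiles the viewer sees.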

Fixation Prediction for 360° Video Streaming

Leveraging commodity HMDs for 360° video streaming is very challenging for two reasons. First, 360° videos contain much more information than conventional videos, and thus are much larger in resolution and file size. With HMDs, each viewer only gets to see a small part of the whole video. Therefore, sending the whole 360° video in full resolution may waste resources, such as network bandwidth, processing power, and storage space. Second, the alternative is to stream only the viewer’s current FoV. We emphasize current, because the FoV changes as the viewer’s head and eyes move, which leads to the main challenge: which FoV should we transfer to meet the viewer’s needs in the next moment?

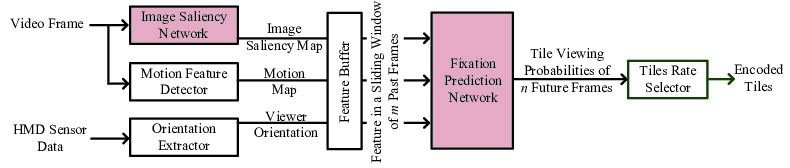

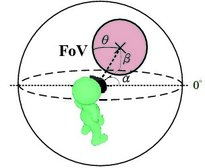

Fig. 4 presents our proposed architecture of a 360° streaming server, in which we focus on the software components related to fixation prediction. We have identified two types of features: content-related features, namely the image saliency map and the motion map, and sensor-related features from HMDs, such as orientation and angular speed. The video frames are sent to the image saliency network and the motion feature detector to generate the image saliency map and the motion map, respectively. Generating these two maps is potentially resource demanding, so we assume that they are created offline for pre-recorded videos. Fig. 5 presents the FoV model of HMDs. The viewer stands at the center of the sphere. Let α and β be the yaw and pitch reported by the HMD’s sensors. The HMD sensor data are transmitted to the orientation extractor to derive the viewer orientations. The feature buffer maintains a sliding window that stores the latest image saliency maps, motion maps, and viewer orientations as the inputs of the fixation prediction network. The video fixation prediction network predicts the future viewing probability of each tile, and the tile rate selector then optimally selects the rates of the encoded video tiles.
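The feature buffer in Fig. 4 is essentially a fixed-length sliding window. A minimal sketch using `collections.deque` is below; the per-tile feature layout, the window length, and the class and field names are assumptions for illustration, not the input format of our fixation prediction network.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Sequence


@dataclass
class FrameFeatures:
    """Features for one video frame (hypothetical per-tile layout)."""
    saliency: Sequence[float]  # per-tile image saliency scores
    motion: Sequence[float]    # per-tile motion magnitudes
    yaw: float                 # viewer yaw (alpha), degrees
    pitch: float               # viewer pitch (beta), degrees


class FeatureBuffer:
    """Sliding window holding the features of the latest `window` frames."""

    def __init__(self, window: int) -> None:
        # A deque with maxlen drops its oldest entry automatically when full
        self.frames: Deque[FrameFeatures] = deque(maxlen=window)

    def push(self, features: FrameFeatures) -> None:
        self.frames.append(features)

    def ready(self) -> bool:
        """True once the window is full and can be fed to the predictor."""
        return len(self.frames) == self.frames.maxlen

    def as_input(self) -> List[List[float]]:
        """One row per frame (oldest first): saliency, motion, yaw, pitch."""
        return [list(f.saliency) + list(f.motion) + [f.yaw, f.pitch]
                for f in self.frames]
```

Once the window is full, each new frame evicts the oldest one, so `as_input()` always returns the features of the most recent `window` frames, ordered from oldest to newest.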

360° Video Viewing Dataset in Head-Mounted Virtual Reality

Using conventional displays to watch 360° videos is often less intuitive, while recently released HMDs, such as the Oculus Rift, HTC Vive, and Samsung Gear VR, offer wider Fields-of-View (FoVs) and thus a more immersive experience. Since a viewer never sees a whole 360° video at once, streaming the 360° video in its full resolution wastes resources, including bandwidth, storage, and computation. Therefore, each 360° video is often split into grids of sub-images, called tiles. With tiles, an optimized 360° video streaming system to HMDs would strive to stream only those tiles that fall in the viewer’s FoV. However, knowing each viewer’s FoV at every moment of every 360° video is not an easy task. To better address this challenge, a large set of content and sensor data from viewers watching 360° videos with HMDs is crucial.

To overcome this limitation and promote reproducible research, we built our own 360° video testbed for collecting traces from real viewers watching 360° videos using HMDs.

The resulting dataset can be used, for example, to predict which parts of 360° videos attract viewers the most. The dataset can also be leveraged in various novel applications with a much broader scope.
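As a simple example of this use, the sketch below counts, for one frame, the fraction of viewers whose FoV overlapped each tile, which gives an empirical map of the most-watched regions. The input format (one list of tile numbers per viewer) is an assumption about how the Tile data would be loaded, and the function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def tile_popularity(traces: List[Iterable[int]]) -> Dict[int, float]:
    """Fraction of viewers whose FoV overlapped each tile in one frame."""
    counts: Counter = Counter()
    for viewed in traces:
        counts.update(set(viewed))  # count each viewer at most once per tile
    return {tile: c / len(traces) for tile, c in counts.items()}


if __name__ == "__main__":
    # Hypothetical tile lists of three viewers for the same frame
    pop = tile_popularity([[109, 110], [110, 111], [110, 110]])
    print(max(pop, key=pop.get), pop[110])  # most-watched tile and its share
```

This prints `110 1.0`: all three hypothetical viewers looked at tile 110. Averaging such maps over many frames and subjects is one simple baseline for predicting attractive regions.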

Publications

Streaming

- Ching-Ling Fan, “Optimal 360° Video Streaming to Head-Mounted Virtual Reality,” in Proc. of IEEE International Conference on Pervasive Computing and Communications (PerCom’18) Ph.D. Forum, Athens, Greece, March 2018. (Accepted to appear.)

- Ching-Ling Fan, Jean Lee, Wen-Chih Lo, Chun-Ying Huang, Kuan-Ta Chen, and Cheng-Hsin Hsu, “Fixation Prediction for 360° Video Streaming in Head-Mounted Virtual Reality,” in Proc. of the 27th Workshop on Network and Operating Systems Support for Digital Audio and Video (NOSSDAV’17), Taipei, Taiwan, June 2017, pp. 67–72.

- Wen-Chih Lo, Ching-Ling Fan, Shou-Cheng Yen, and Cheng-Hsin Hsu, “Performance Measurements of 360° Video Streaming to Head-Mounted Displays Over Live 4G Cellular Networks,” in Proc. of Asia-Pacific Network Operations and Management Symposium (APNOMS’17), Seoul, South Korea, October 2017, Student Travel Grant, pp. 1–6.

Dataset

- Wen-Chih Lo, Ching-Ling Fan, Jean Lee, Chun-Ying Huang, Kuan-Ta Chen, and Cheng-Hsin Hsu, “360° Video Viewing Dataset in Head-Mounted Virtual Reality,” in Proc. of the 8th ACM on Multimedia Systems Conference (MMSys’17), Taipei, Taiwan, June 2017, Dataset Paper, pp. 211–216.

Presentations

- “Performance Measurements of 360° Video Streaming to Head-Mounted Displays Over Live 4G Cellular Networks”

- “Fixation Prediction for 360° Video Streaming in Head-Mounted Virtual Reality”

- “360° Video Viewing Dataset in Head-Mounted Virtual Reality”

- “Optimizing 360° Video Streaming to Head-Mounted Virtual Reality”

360° Video Viewing Dataset

This dataset consists of the content (10 videos from YouTube) and sensor data (50 subjects) of 360-degree videos watched with HMDs.

The folders are organized as follows:

- Saliency: contains the videos with the saliency map of each frame, based on Cornia et al.’s work; the saliency maps indicate how strongly each region of the original video frame attracts attention

- Motion: contains the videos with the optical flow computed between consecutive frames; the optical flow indicates the relative motions between the objects in 360-degree videos and the viewer

- Raw: contains the raw sensor data (raw x, raw y, raw z, raw yaw, raw roll, and raw pitch) with timestamps, captured using OpenTrack while the viewers were watching 360-degree videos

- Orientation: contains the orientation data (raw x, raw y, raw z, raw yaw, raw roll, and raw pitch), aligned with the time of each video frame. The calibrated orientation data (cal. yaw, cal. pitch, and cal. roll) are provided as well

- Tile: contains the numbers of the tiles that overlap the viewer’s Field-of-View (FoV), derived from the orientation data, where the tile size is 192×192. Knowing which tiles overlap the viewer’s FoV is useful for optimizing 360-degree video streaming systems to HMDs; for example, the system can stream only those tiles to reduce the required bandwidth, or allocate higher bitrates to those tiles for better user experience
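Tile lists of this kind can be approximated from an orientation by sampling directions inside the viewport and mapping each one to an equirectangular tile. The sketch below assumes a 3840×1920 frame (consistent with the 200 tiles of 192×192 mentioned in the FAQ), a square 100°×100° rectilinear viewport, 0-based row-major tile numbers, and yaw about the vertical axis with pitch as elevation, where yaw = pitch = 0 points at the frame center. All of these, and the function name, are assumptions; sampling can miss tiles that the viewport only grazes, and this is not necessarily the procedure used to produce the dataset.

```python
import math
from typing import Set


def fov_tiles(yaw_deg: float, pitch_deg: float, fov_deg: float = 100.0,
              width: int = 3840, height: int = 1920, tile: int = 192,
              samples: int = 32) -> Set[int]:
    """Approximate set of equirectangular tiles overlapped by a viewport.

    Samples a samples x samples grid on the viewport's image plane, turns each
    sample into a direction, and records the tile it falls in. Tiles are
    numbered row-major from 0 at the upper-left corner. fov_deg must be < 180.
    """
    cols = width // tile
    half = math.tan(math.radians(fov_deg) / 2)  # half-width of the image plane
    cp, sp = math.cos(math.radians(pitch_deg)), math.sin(math.radians(pitch_deg))
    cy, sy = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    tiles = set()
    for i in range(samples):
        for j in range(samples):
            # Point on the image plane one unit in front of the viewer (+z, y up)
            x = (2 * (i + 0.5) / samples - 1) * half
            y = (2 * (j + 0.5) / samples - 1) * half
            z = 1.0
            # Tilt up by the pitch (rotation about the horizontal x axis)
            y, z = y * cp + z * sp, -y * sp + z * cp
            # Turn by the yaw (rotation about the vertical y axis)
            x, z = x * cy + z * sy, -x * sy + z * cy
            lon = math.atan2(x, z)                 # 0 = frame center, range -pi..pi
            lat = math.atan2(y, math.hypot(x, z))  # +pi/2 = top of the frame
            px = int((lon / (2 * math.pi) + 0.5) * width) % width
            py = min(int((0.5 - lat / math.pi) * height), height - 1)
            tiles.add((py // tile) * cols + px // tile)
    return tiles
```

For example, `fov_tiles(0, 0)` contains tile 110, whose upper-left corner is the frame center, and `fov_tiles(180, 0)` contains tiles from both the leftmost and rightmost columns because the viewport straddles the seam of the equirectangular frame.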

External Links

- OpenTrack, head tracking software for MS Windows, Linux, and Apple OSX

- Kvazaar, an open-source HEVC encoder

- MP4Client, a highly configurable multimedia player available in many flavors (command-line, GUI and browser plugins)

- MP4Box, a multimedia packager which can be used for performing many manipulations on multimedia files like AVI, MPG, TS, but mostly on ISO media files

- Facebook Transform360, a video filter that transforms 360° videos in equirectangular projection into cubemap projection

- xmar/360Transformations, a tool that takes any 360-degree video as input and outputs another 360-degree video mapped into a different geometric projection (currently available mappings are equirectangular, cube map, rhombic dodecahedron, and pyramid)

- Oculus Video, the official Video app from Oculus

- GamingAnywhere, an open-source cloud gaming platform

- Keras, a high-level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano

- Lucas-Kanade optical flow, a method for estimating the relative motions between the objects in 360° videos and the viewers

- V-PSNR, the Peak Signal-to-Noise Ratio (PSNR) for the viewer FoV (instead of the whole 360° video)

- S-PSNR, a spherical PSNR that summarizes the average quality over all possible viewports
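V-PSNR evaluates quality only where the viewer looks. The sketch below computes plain PSNR and a viewport variant that simply crops a rectangle from the projected frame. The crop is a simplification (a faithful V-PSNR would first render the viewport with a rectilinear projection), and the function names, the 8-bit peak value of 255, and the list-of-rows frame format are assumptions.

```python
import math
from typing import List, Sequence


def psnr(ref: Sequence[float], dist: Sequence[float], max_val: float = 255.0) -> float:
    """PSNR in dB between two equally long sequences of pixel values."""
    if len(ref) != len(dist) or not ref:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(ref, dist)) / len(ref)
    return math.inf if mse == 0 else 10 * math.log10(max_val ** 2 / mse)


def crop(frame: List[List[float]], x0: int, y0: int, w: int, h: int) -> List[float]:
    """Flatten the w x h window whose upper-left pixel is (x0, y0)."""
    return [p for row in frame[y0:y0 + h] for p in row[x0:x0 + w]]


def viewport_psnr(ref_frame: List[List[float]], dist_frame: List[List[float]],
                  x0: int, y0: int, w: int, h: int, max_val: float = 255.0) -> float:
    """PSNR restricted to one rectangular viewport (simplified V-PSNR)."""
    return psnr(crop(ref_frame, x0, y0, w, h), crop(dist_frame, x0, y0, w, h), max_val)
```

A distortion outside the viewport lowers the whole-frame PSNR but leaves the viewport PSNR unchanged, which is why per-viewport metrics suit HMD viewing.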

Frequently Asked Questions

Please, can you share with me the link to download the 360° Video Viewing Dataset (MMSys’17 paper)?

The dataset is available online here.

In your dataset, do you give the subjects enough space to turn around?

In our experiments, all subjects were asked to stand and were given enough space to turn around while using the HMD.

What is the shape of Field-of-View (FoV) you measured in the dataset paper?

As we mentioned in the paper, the HMD (Oculus Rift DK2) displays the current FoV, which is a fixed-size region, e.g., a 100°×100° circle.

How do you generate the tiles, such as 192×192, 240×240, and 320×320?

For all 360° videos, we divide each frame, which is encoded with H.264/AVC and mapped in the equirectangular model, into 192×192 tiles, so there are 200 tiles in total. Then we number the tiles from upper-left to lower-right.
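The numbering rule in this answer can be written as a short formula, tile = row × columns + column. The sketch assumes a 3840×1920 equirectangular frame, which is what 200 tiles of 192×192 imply for a 2:1 frame (20 columns by 10 rows). Whether the dataset starts counting at 0 or 1 is not stated here, so the base is a parameter; the function name is hypothetical.

```python
def tile_number(px: int, py: int, width: int = 3840, height: int = 1920,
                tile: int = 192, base: int = 0) -> int:
    """Number of the tile containing pixel (px, py), counted row by row
    from the upper-left tile (which gets the number `base`)."""
    if not (0 <= px < width and 0 <= py < height):
        raise ValueError("pixel outside the frame")
    cols = width // tile  # 20 tiles per row for a 3840-pixel-wide frame
    return base + (py // tile) * cols + px // tile
```

With these defaults the upper-left pixel lies in tile 0 and the lower-right pixel in tile 199, i.e., 200 tiles in total.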

It is unclear why the saliency maps and motion maps should be part of the dataset itself. Can they be used to guarantee accurate timing between the dataset and the original data?

The image saliency map identifies the objects that attract the viewers’ attention the most, and the motion map highlights the moving objects. We use both content and sensor data in our proposed fixation prediction network, which is part of a tile-based 360° video streaming server.

Saliency maps are computed on equirectangular images using a classical image-based saliency mapping approach, but there is no research indicating that this is valid. For CubeMap images, the same saliency mapping software might not achieve the same results.

There is no research yet that indicates how pre-trained CNNs perform when computing saliency maps under different projection models, such as equirectangular, cube map, and rhombic dodecahedron. An improved projection mechanism could be designed to mitigate the shape distortion caused by current projection models of 360° videos, which would make current saliency detection techniques more applicable to 360° videos.

How many public 360° video datasets are there in the literature, and what special features do they have?

To the best of our knowledge, four 360° video datasets were published at ACM Multimedia Systems 2017 (MMSys’17). Here is an overview of each dataset; each of them contains different features.

We need your help and participation to improve our systems and algorithms.

Please do not hesitate to contact us by sending an email. We will respond as fast as we can. Thank you. 🙂

People

National Tsing Hua University, HsinChu, Taiwan

- Cheng-Hsin Hsu (Professor)

- Ching-Ling Fan (PhD Student)

- Wen-Chih Lo (Graduate Student)

- Shun-Huai Yao (Graduate Student)

- Shou-Cheng Yen (Graduate Student)

- Jean Lee (Undergraduate Student)

- Yi-Yun Liao (Undergraduate Student)

- Yi-Chen Hsieh (Undergraduate Student)

Academia Sinica, Taipei, Taiwan

- Kuan-Ta Chen (Professor), Academia Sinica, Taipei, Taiwan

National Chiao Tung University, HsinChu, Taiwan

- Chun-Ying Huang (Professor), National Chiao Tung University, HsinChu, Taiwan

Contact us

More details can be found here.